Loki: jobs and internships

If you would like to work with us, but none of the offers below match your qualifications, your expertise or your expectations, you can contact Géry Casiez (gery.casiez@univ-lille.fr) to discuss other possible opportunities for joining the team.

Postdoc

PhDs

While soft robotic manipulators are intrinsically safer than rigid manipulators, they may be perceived as threats due to diminished movement legibility, increased risks of movement singularities, and their animal-like appearances. This project leverages virtual reality (VR) setups and methods from speculative design to study perceived safety when users are immersed in interactive tasks with realistic virtual plant-like and animal-like soft robots. We posit that the aesthetics of soft robots have a strong impact on perceived safety, and that this impact may have been overlooked.

see the offer...

contacts: Sylvain Malacria (sylvain.malacria@inria.fr)

Every year, an increasing number of presentation materials, such as slide decks or posters, are produced to be delivered at various events. The delivery of these presentation materials is essential for sharing companies' agendas or scientific results. The materials are typically the result of a collaborative design process that aggregates data, sometimes produced by several users, which is iteratively refined and then delivered by one or several presenters. Indeed, during the preliminary work, valuable information embedded in inherent materials (data sets, notebooks, interview transcripts, source code) or visual materials (keynote slide decks, graphics materials, demonstrators, videos) must be gathered. Ultimately, the final presentation material is the result of collaborative work that requires coordination and adaptation. This PhD will explore the design of interactive systems that facilitate the collaborative creation of presentation materials, notably for research, focusing on slide-deck-based and/or poster presentations.

see the offer...

Internships

Master level

Continuum parallel robots (CPRs) are parallel assemblies of flexible legs joined at a single end-effector platform that potentially overcome limitations in accuracy, stiffness, payload, and rapidity of other robot types (e.g., continuum serial manipulators). However, they may suffer from singular configurations in which they become elastically unstable and therefore difficult to control, leading to potentially threatening physical behaviors for the humans around them. This internship focuses on the human perception of singularities and studies which factors make them appear threatening in specific scenarios or, on the contrary, aesthetically pleasing.

see the offer...

Master level

Graphical user interfaces (GUIs) can be tailored by end-users to better match their needs and preferences, and better adapt to their tasks. While this might be beneficial by increasing productivity for certain tasks, it can also be detrimental if layouts of commands break design logics and are changed too often. We propose to study the impact of frequent changes on the learning of interface features through controlled user studies on memorization.

see the offer...

Master level

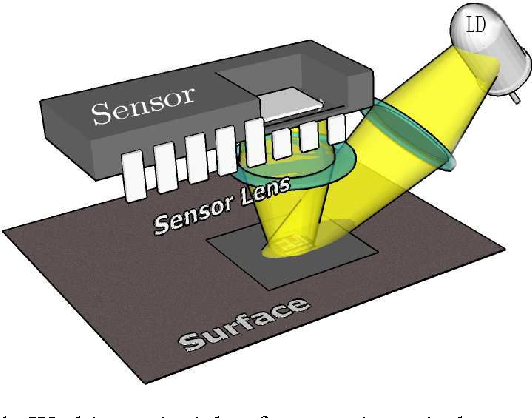

Current systems are unable to determine the resolution of a computer mouse from classical events ("MouseEvents") or accessible system properties. The objective of this project is to develop a learning technique that can determine this resolution from the raw information sent by the mouse.

see the offer...

Master level

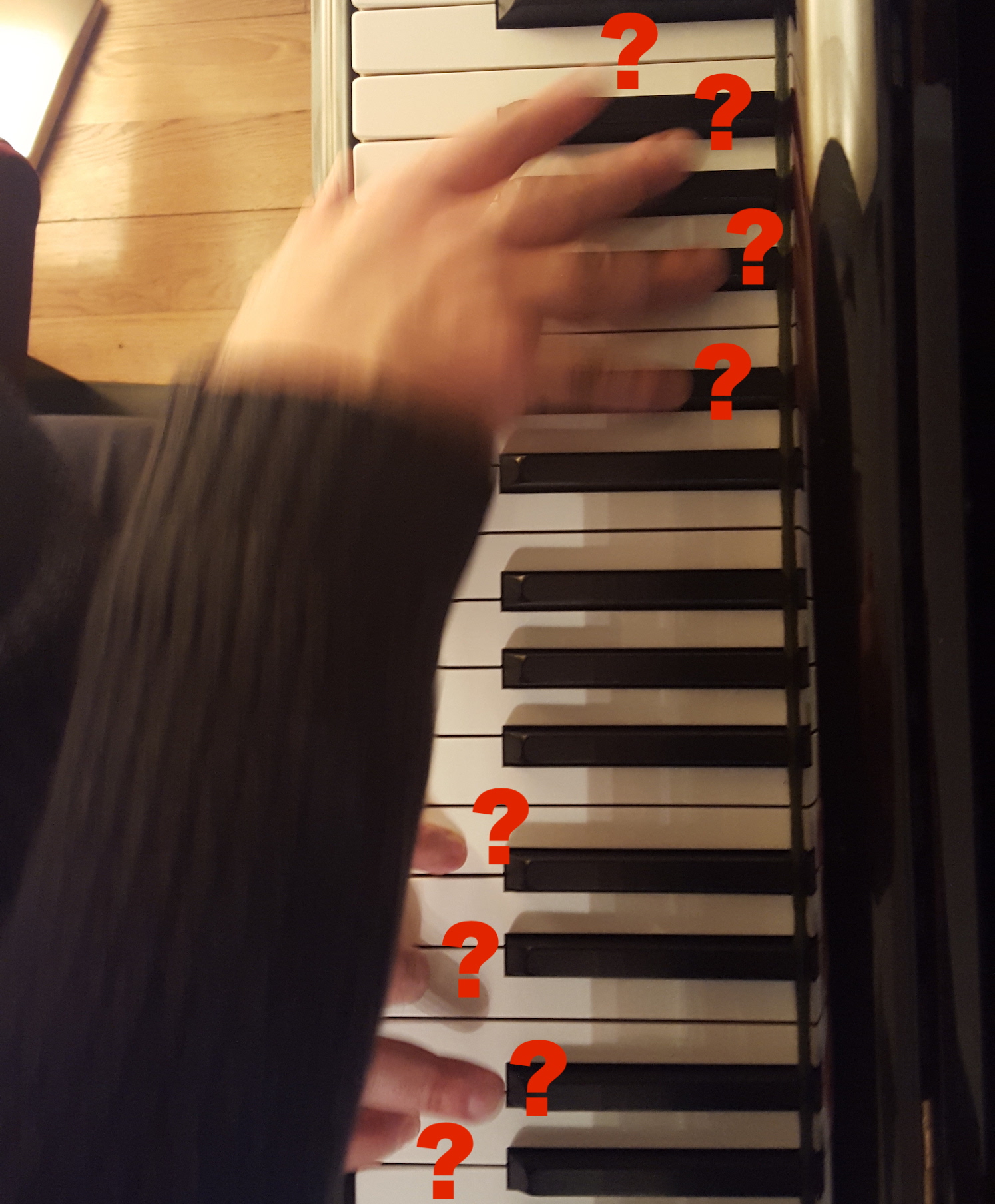

As part of their practice, pianists (professional or amateur) need to annotate the scores they are working on with information on how they will interpret them. In particular, they have to note the fingerings they will apply, i.e. which finger plays which note on which key of the keyboard. It is a long and tedious job, as it cannot be automated. Although there are conventional playing techniques and fingerings, the pianist's technical level, his or her particularities and personal interpretation will result in fingerings that vary from one person to another. During their working session, pianists must therefore play the piece, determine the "right" fingerings and constantly go back and forth to annotate them on the score, before possibly transcribing them to a digital score using dedicated software. The purpose of this project is to conduct robustness and reliability tests on a first hardware and software prototype that allows the automatic and real-time detection of fingerings performed on a piano. These tests will improve the system, the objective being to make it easier for pianists to annotate fingerings on their scores and to help them practice these fingerings as part of piano practice or teaching. Based on this prototype, interactions and visualization techniques for these applications can also be studied during the internship.

see the offer...

Master level

“Interaction interferences” are a family of usability problems defined by a sudden and unexpected change in an interface, which occurs when the user is about to perform an action, too late for him or her to interrupt it. They can cause effects ranging from frustration to loss of data. For example, a user is about to click on a hyperlink on their phone, but just before the ‘tap’ a pop-up window appears above the link and the user is unable to stop the action, resulting in the opening of an unwanted and possibly harmful webpage. Although these problems are quite frequent, there is currently no precise characterization of, or technical solution to, this family of problems.

see the offer...

Master level

End-to-end latency, measured as the time elapsed between a user action on an input device and the update of visual, auditory or haptic information, is known to deteriorate user perception and performance. The synchronization between visual and haptic feedback is also known to be important for perception. While tools are now available to measure and determine the origin of latency on visual displays, a lot remains to be done for haptic actuators. In previous work, we designed a latency measurement tool, and used it to measure the latency of visual interfaces. Our results showed that with a minimal application, most of the latency comes from the visual rendering pipeline.

While visual systems are designed to run at 60Hz, haptic systems can run at much higher frequencies, up to 1000Hz. This means the bottleneck of haptic interfaces might be different from that of visual interfaces. There might also be large differences between different kinds of haptic actuators, some of them being designed to be highly responsive. Moreover, the perception of temporal parameters of haptic stimulations differs from that of visual stimulations. We foresee differences in the perception of latency between haptic and visual interfaces.

see the offer...

Master level

contacts: Mathieu Nancel (mathieu.nancel@inria.fr)

This project consists in exploring dynamic models from the motion science and neuroscience literature, in order to validate their applicability for the automatic or semi-automatic design of acceleration functions for the mouse or touchpad. These results will make it possible to define more effective methods for designing acceleration functions for general public applications (default functions in OSs, acceleration adapted for a given person or task), advanced applications (gaming, art), or even for the assistance of motor disabilities.

see the offer...

Licence/Master level

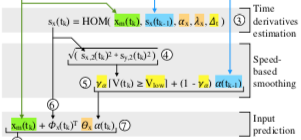

The total, or "end-to-end", latency of an interactive system comes from many software and hardware sources, some of which cannot be reduced further given today's state of the art. An alternative solution, and one that is immediately available, is to predict the user's movements in the near future, in order to display feedback that corresponds to the user's actual position rather than to the last captured position. Naturally, the amount of latency that can be compensated for depends on the quality of the prediction.

The objective of this project is to work either on the dynamic adjustment of the prediction time distance or to develop new prediction methods specifically for augmented reality (AR).
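As a rough illustration of the idea of latency compensation by prediction, the sketch below extrapolates a pointer position over a given prediction time distance using a simple constant-velocity assumption. It is not the method used by the team: the names `Sample` and `predict_position`, and the choice of constant-velocity extrapolation, are assumptions made only for this example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Sample:
    """One captured input sample (hypothetical structure for this sketch)."""
    t: float  # timestamp, in seconds
    x: float  # horizontal position, in pixels
    y: float  # vertical position, in pixels


def predict_position(prev: Sample, last: Sample, horizon: float) -> Tuple[float, float]:
    """Extrapolate the position `horizon` seconds after `last`.

    Assumes the velocity measured between the two most recent samples
    stays constant over the prediction horizon.
    """
    dt = last.t - prev.t
    if dt <= 0:
        # No usable velocity estimate: fall back to the last captured position.
        return (last.x, last.y)
    vx = (last.x - prev.x) / dt
    vy = (last.y - prev.y) / dt
    return (last.x + vx * horizon, last.y + vy * horizon)


if __name__ == "__main__":
    # Two samples 10 ms apart, predicted 50 ms ahead.
    previous = Sample(t=0.00, x=0.0, y=0.0)
    latest = Sample(t=0.01, x=1.0, y=2.0)
    print(predict_position(previous, latest, horizon=0.05))
```

In such a scheme, a longer horizon compensates for more latency but amplifies errors in the velocity estimate, which is one reason adjusting the prediction time distance dynamically may be worthwhile.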

see the offer...
